- The whole idea hinges on the killswitch: an unregistered domain name hard-coded in the malware.

- Encryption and further execution stop if the domain specified in the code resolves and an HTTP connection to it is established.

- The killswitch domain name in the WannaCry code has changed, which means sinkholing just one or two domain names will not work.

- So what if we could resolve all unregistered domains to a honeypot?

- DNS by nature cannot be gamed to do this globally, as it would cause havoc.

- MaxMind GeoIP has a domain name database.

- Write a small drop-in replacement DNS server which uses a local copy of this database.

- The drop-in DNS server sits in front of the organization’s actual DNS server.

- It checks each queried domain name against the MaxMind database.

- If the name is found in the database, it lets the request pass on to the actual DNS server, or replies itself.

- If the name is not found, the drop-in DNS server replies with a honeypot IP.

- The honeypot IP runs an HTTP server and accepts the HTTP connection.

- The HTTP connection succeeds and the killswitch is activated. WannaCrypt/WannaCry stops execution.
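The lookup step in the list above can be sketched roughly as follows. This is only an illustration of the decision, not a working DNS server: the `known_domains` set stands in for the local copy of the domain database, and `HONEYPOT_IP`, `is_known` and `decide` are hypothetical names picked for this example.

```python
# Sketch of the "known domain -> forward, unknown domain -> honeypot" decision.
# HONEYPOT_IP and the contents of known_domains are illustrative assumptions.

HONEYPOT_IP = "192.0.2.10"  # placeholder address from the documentation range


def normalize(name):
    """Lower-case a query name and drop the trailing root dot."""
    return name.rstrip(".").lower()


def is_known(name, known_domains):
    """True if the name or any parent domain (but not the bare TLD) is known."""
    labels = normalize(name).split(".")
    for i in range(len(labels) - 1):
        if ".".join(labels[i:]) in known_domains:
            return True
    return False


def decide(name, known_domains):
    """Return ("forward", None) or ("honeypot", HONEYPOT_IP) for a query name."""
    if is_known(name, known_domains):
        return ("forward", None)
    return ("honeypot", HONEYPOT_IP)
```

A real drop-in server would call something like `decide()` for every incoming query and either relay the query upstream or synthesize an A record pointing at the honeypot.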

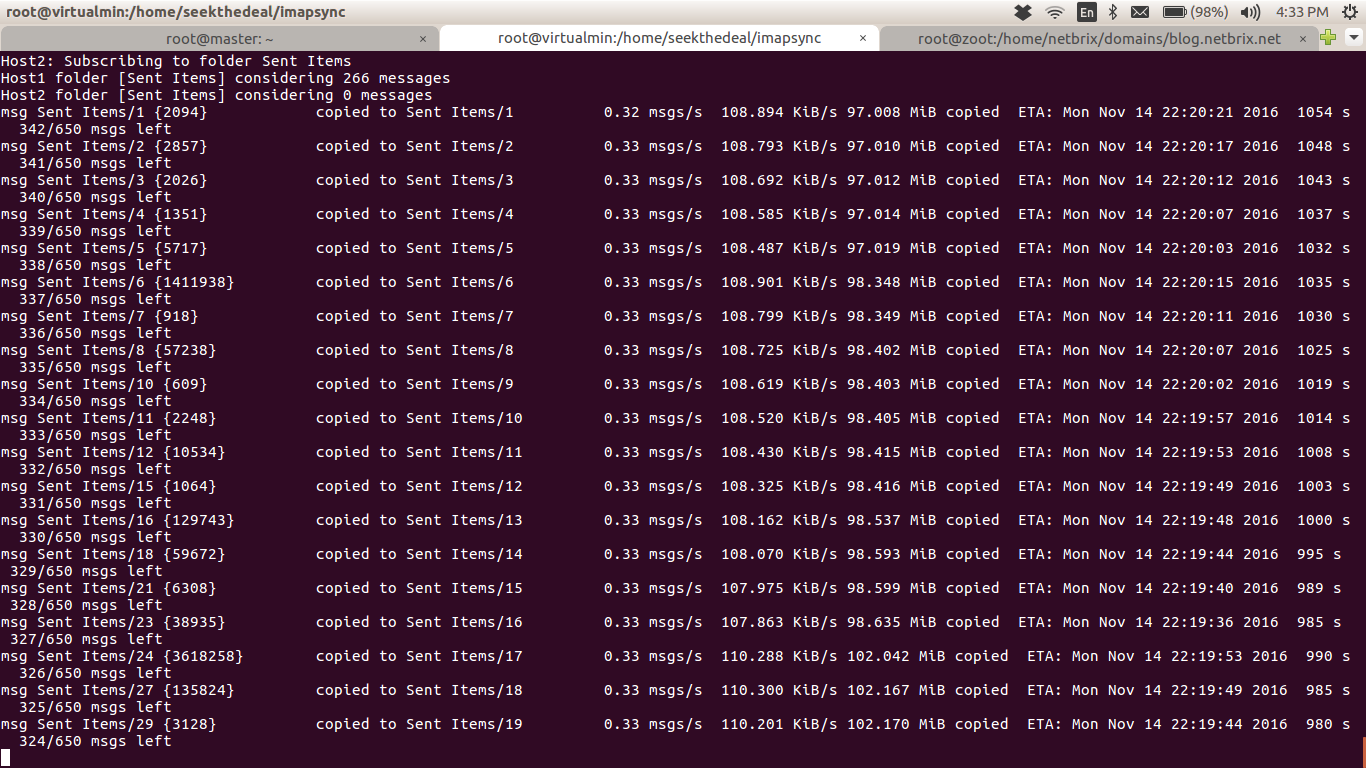
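The honeypot end of the idea could be as small as the sketch below: an HTTP server that answers every GET with a 200 and remembers which Host header was asked for. The names `KillSwitchHandler`, `start_honeypot` and `seen_hosts`, and the default port, are assumptions made for this example only.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

seen_hosts = []  # Host headers of the requests that reached the honeypot


class KillSwitchHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Record which domain the client thought it was talking to.
        seen_hosts.append(self.headers.get("Host", ""))
        body = b"ok\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep stderr quiet; seen_hosts is the log in this sketch


def start_honeypot(host="127.0.0.1", port=8080):
    """Start the server in a background thread and return it."""
    server = ThreadingHTTPServer((host, port), KillSwitchHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

In a real deployment the server would listen on the honeypot IP on port 80, and anything showing up in `seen_hosts` is worth a look, since nothing legitimate should be asking for unregistered names.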

copying email across hosts

Often, during server migrations across control panels (or in the absence of control panels), one needs to copy email which the client wants to preserve. IMAP allows us to be lazy and leave all our years of communication online on our servers. So when a client insists that they need their email moved, you’re left with the supremely boring task of setting up the two IMAP accounts in an IMAP client (Thunderbird or Outlook), one from the old server and the other from the new one, downloading all mail from the old server and then dragging all the folders across to the new server. Extremely slow and frankly frustrating.

Enter imapsync (well, it’s been around for at least 3 years, maybe longer).

It doesn’t matter where you install imapsync as you’re going to be passing the hostnames of source and destination to the tool. If you’re on CentOS, get the EPEL repository by running

yum install epel-release

Next, set up imapsync by running

yum -y install imapsync

This will set up the dependencies etc. and you can now proceed to the actual mail copy.

For the purpose of clarity we are going to be copying the mails from server1 to server2. Just for the sake of structure and discipline, make a directory for each account you are going to synchronise. In this directory make two files, pass1 and pass2:

echo "passwordold" > pass1

echo "passwordnew" > pass2

Now run the command that does it all:

imapsync --host1 server1 --user1 <username_old> --passfile1 ./pass1 --host2 server2 --user2 <username_new> --passfile2 ./pass2

Now just sit back and relax as the script logs into both servers, figures out which folders to subscribe to on the source server, displays the counts and then starts the copy process.
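If you have more than a couple of accounts to move, the one-directory-per-account layout lends itself to a tiny wrapper. The sketch below only builds the argument list for the command shown above; `imapsync_argv` and its parameters are hypothetical names, and it assumes each account directory contains the pass1 and pass2 files described earlier.

```python
from pathlib import Path


def imapsync_argv(host1, user1, host2, user2, account_dir):
    """Build the imapsync command line for one account directory."""
    account_dir = Path(account_dir)
    return [
        "imapsync",
        "--host1", host1, "--user1", user1,
        "--passfile1", str(account_dir / "pass1"),
        "--host2", host2, "--user2", user2,
        "--passfile2", str(account_dir / "pass2"),
    ]


# Example use (not executed here):
#   import subprocess
#   subprocess.run(imapsync_argv("server1", "old_user", "server2", "new_user", "accounts/old_user"), check=True)
```

Passing a list to `subprocess.run` avoids shell quoting problems with odd usernames.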

For a decently well-maintained mailbox it should be done in under 30 minutes. The progress messages can be suppressed, or you can leave them on as they tell you where the copy process has got to. Finally you get a nice summary of what went down:

Start difference host2 - host1 : -650 messages, -205293825 bytes (-195.783 MiB)

Final difference host2 - host1 : -12 messages, -1403319 bytes (-1.338 MiB)

Detected 0 errors

If you login to your mail client on the new server you should see all the mail from your old server.
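When you run this for many accounts it helps to check the summary automatically rather than by eye. Here is a rough sketch that pulls the numbers out of the summary lines shown above; `parse_summary` is a made-up helper, and the regular expressions assume the summary keeps exactly the wording of this sample output.

```python
import re

# Accept both a plain hyphen and an en dash between "host2" and "host1".
DIFF_RE = re.compile(
    r"(Start|Final) difference host2 [-–] host1 : (-?\d+) messages, (-?\d+) bytes"
)
ERRORS_RE = re.compile(r"Detected (\d+) errors")


def parse_summary(text):
    """Return the start/final differences and the error count as a dict."""
    summary = {}
    for stage, messages, size in DIFF_RE.findall(text):
        summary[stage.lower()] = {"messages": int(messages), "bytes": int(size)}
    match = ERRORS_RE.search(text)
    if match:
        summary["errors"] = int(match.group(1))
    return summary
```

A non-zero `errors` value, or a final difference that is still large, is the cue to run the sync for that account again.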

Slave rebuild — out of sync MySQL replication

Sometimes you may end up in a situation where the slave server is out of sync and replication is halted due to a table crash on the slave or a similar situation. The best way to recover from an out of sync slave is to rebuild the slave. There are ways to try and fix the table, but let’s just say YMMV, and for all you know a rebuild would work out cheaper (in terms of time).

To rebuild follow these steps.

On the master database at the cli run these:

RESET MASTER;

FLUSH TABLES WITH READ LOCK;

SHOW MASTER STATUS;

RESET MASTER resets the replication counter on the master server (it deletes the existing binary logs and starts a fresh one). The reason we do this is that we will be taking a snapshot of the master database and then restoring it to the slave, so technically this is going to be the initial state for the slave server, and this is the point from where all subsequent updates to the master need to be applied to the slave. Also, all tables are being locked for writing so that no inserts, deletes or updates are carried out on the database while the snapshot is made. The last command will output something like this:

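mysql> show master status;

+------------------+----------+--------------+--------------------------------------------------+

| File             | Position | Binlog_Do_DB | Binlog_Ignore_DB                                 |

+------------------+----------+--------------+--------------------------------------------------+

| mysql-bin.000001 |      107 |              | information_schema,performance_schema,mysql,test |

+------------------+----------+--------------+--------------------------------------------------+

1 row in set (0.00 sec)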

The Position field and the file name of the binary log are what we need to note down carefully and keep safe, as they will be used later on in this process. Right now the database is locked and no writes are happening, so nothing is being written to the bin log, which means that the log position, in this case 107, is the point at which we will be taking our database dump. Once the write lock on the tables is removed the position counter will start moving again, so the slave needs to be told that this is the position from where it should start reading the bin log file (specified by the File field in the above output) for the purpose of mirroring all writes to the master db.
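Since a typo in the file name or position will derail the whole rebuild, it can be worth capturing the values programmatically. The sketch below parses the tabular output shown above and builds the statement that the slave will need later; `parse_master_status` and `change_master_sql` are hypothetical helper names, and the parser assumes the standard pipe-delimited layout of the mysql client.

```python
def parse_master_status(text):
    """Extract (file, position) from the table printed by SHOW MASTER STATUS."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        # Only header and data rows start and end with a pipe.
        if line.startswith("|") and line.endswith("|"):
            rows.append([cell.strip() for cell in line[1:-1].split("|")])
    if len(rows) < 2:
        raise ValueError("expected a header row and a data row")
    status = dict(zip(rows[0], rows[1]))
    return status["File"], int(status["Position"])


def change_master_sql(log_file, log_pos):
    """Build the statement the slave needs to resume from this point."""
    return "CHANGE MASTER TO MASTER_LOG_FILE='%s', MASTER_LOG_POS=%d;" % (
        log_file,
        log_pos,
    )
```

Saving the generated statement to a file next to the dump means the coordinates travel with the data they belong to.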

So yes, we have our database write protected and sitting there. Savor the moment as all the associated applications sweat it out wondering why they cannot get write locks on the tables they wish to write to. Done? Now start a backup of the databases from the master.

mysqldump -u <user> -p --opt --single-transaction --comments --hex-blob --dump-date --no-autocommit --all-databases > dbdump.sql

(NOTE: The mysqldump should be done from a second ssh terminal, as closing out of the cli will release the lock on the tables.)

This will dump all the databases to a single SQL file (in my case, last night, that turned out to be a 40GB file). The other options do the following: --single-transaction dumps InnoDB tables from a consistent snapshot so that applications can still read data, --comments adds comments to the dump file for better readability (your choice really), --hex-blob dumps binary columns using hexadecimal notation, and --no-autocommit ensures that the inserts for each table are committed in a batch for faster execution.

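You can unlock the tables as soon as the mysqldump starts (--single-transaction only protects InnoDB tables, so if you have MyISAM tables keep the lock until the dump finishes). To unlock, run the following from the mysql cli (where you did the reset):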

UNLOCK TABLES;

Once the mysqldump completes, copy your SQL file to the slave server using rsync, scp or whatever you prefer.

IMPORTANT: Now we are on the slave server.

First of all on the slave we need to stop replication by running:

STOP SLAVE;

A nifty trick at this point is to turn off binary logging if it is on. This can speed up your import by up to 3 times (again, that’s an unmeasured figure and YMMV, but suffice to say you will see a huge speed improvement if you turn off the bin log).

SET sql_log_bin=0;

Now start the import and go get a shower, walk the kids, go grocery shopping with the wife, watch a movie, catch a nap or all of the above (a 40G restore would easily take 3 hours to complete). You can use one of many ways to import a mysqldump; I prefer doing it from the cli using:

SOURCE dbdump.sql;

Once the restore to the slave is complete you might want to, just to be sure, run a CHECK and REPAIR on your tables. If it throws up any errors, this would be a good time to fix them. In my case three large tables failed to restore, so basically I had two options: figure out how to fix the problem or redo the reset/lock/dump/unlock/scp/restore routine. I chose to figure out how to fix the situation.

With mysqldump files, especially a dump of all databases on a server, it is practically very difficult to restore just one table. You can do it, but you would never be sure it went right until replication kicks in and proceeds without a hitch, and even then you’d have slogged and sweated a lot. How I did it was pretty simple in the end. In my case the tables had crashed and were practically not there. Stuff like that happens when you have slave replication running for 1.5 years, which is one of the reasons why I think a test restore from the slave once in a while is a very good idea, and why a server reboot once in a while would not hurt anything except arrogant serveradmin egos tied to uptimes of 500+ days. In my case the server had not been rebooted in 559 days, then suddenly it was, and the mandatory fsck on reboot muffed up these three tables on the slave. So I copied the structure from the master (not a big deal, but if you must):

SHOW CREATE TABLE tablename; -- run this on the master

The resulting output will give you the statement to create the table; copy that and run it on the slave.

CREATE TABLE tablename (…..);

Next comes the genius bit. Take the 40G mysqldump file and look for the INSERT statements that put the data into the table. Write those out to a separate file. Open this file and carefully eyeball it to make sure that no other inserts have been inadvertently included (that is, check that your regular expression is good). Basically:

grep INSERT dbdump.sql | grep tablename > tablename.sql

NOTE: be very careful about the eyeballing of the above file. Maybe run something like this as well:

grep INSERT tablename.sql | cut -d"(" -f1

The above will throw something like this:

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum` VALUES

INSERT INTO `cromagnum_featured` VALUES

INSERT INTO `croquet_messages` VALUES

INSERT INTO `croquet_messages` VALUES

The last three inserts are what I was talking about (they could be avoided by making a better regex, but when you’re in a hurry it’s easier to keep things simple). At this point edit tablename.sql and remove the inserts to other tables. Why are we doing this and not letting replication take care of it? Well, for one, replication would not start if it finds these tables missing, and then there is the reset which we did: all data prior to that point in the bin log would be lost because we’re not restoring it to the slave. So yes, these acrobatics are necessary. Copy tablename.sql to the slave and restore it using:

USE databasename;

SOURCE tablename.sql;
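If you are less rushed than I was, the grep-and-eyeball step can be replaced by a stricter filter. The sketch below is one way to do it, assuming the dump has the usual mysqldump layout where every database section starts with a USE `dbname`; line and every insert starts with INSERT INTO `tablename`; the function name `extract_inserts` is made up for this example.

```python
import re

USE_RE = re.compile(r"^USE `([^`]+)`;")


def extract_inserts(lines, database, table):
    """Yield only the INSERT lines for one table in one database."""
    insert_prefix = "INSERT INTO `%s` " % table
    current_db = None
    for line in lines:
        match = USE_RE.match(line)
        if match:
            # Remember which database the following statements belong to.
            current_db = match.group(1)
            continue
        if current_db == database and line.startswith(insert_prefix):
            yield line


# Example use on the real dump (streams line by line, so 40G is fine):
#   with open("dbdump.sql", errors="surrogateescape") as src, \
#        open("tablename.sql", "w", errors="surrogateescape") as dst:
#       dst.writelines(extract_inserts(src, "databasename", "tablename"))
```

Because the closing backtick is part of the prefix, `cromagnum` no longer drags in `cromagnum_featured`, and tracking the USE lines keeps a same-named table in another database out of the file.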

So now your database is restored to the point where we took the mysqldump. However, we still need to start replication, as the slave has to catch up and then keep up with the master. At this point I ran a mysqlcheck -c just to make sure once again that all databases and tables were in ship shape before I put them back in business. Once that completed (in about two hours) I started the slave by telling it which binary log on the master to look at and from what position replication needed to start. This is where the “SHOW MASTER STATUS” output comes in handy:

RESET SLAVE;

CHANGE MASTER TO MASTER_LOG_FILE='mysql-bin.000001', MASTER_LOG_POS=107;

START SLAVE;

That’s it. The slave should now catch up on the past 8-10 hours during which the master was happily purring away powering the websites/applications.

You can look at the slave status to confirm all is well by running:

SHOW SLAVE STATUS\G

The things to look for are:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

and

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:
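If you would rather have a script do the looking, for a cron job say, the check boils down to the few fields listed above. A minimal sketch, assuming the vertical output format produced by \G; `parse_vertical` and `replication_problems` are hypothetical names.

```python
def parse_vertical(text):
    """Turn the 'Key: value' lines of \\G output into a dict."""
    status = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        # Skip the row separator and anything without a colon.
        if sep and not key.strip().startswith("*"):
            status[key.strip()] = value.strip()
    return status


def replication_problems(status):
    """Return a list of human-readable problems; empty means healthy."""
    problems = []
    for key in ("Slave_IO_Running", "Slave_SQL_Running"):
        if status.get(key) != "Yes":
            problems.append("%s is %r" % (key, status.get(key)))
    for prefix in ("Last_IO", "Last_SQL"):
        errno = status.get(prefix + "_Errno")
        if errno != "0":
            message = status.get(prefix + "_Error", "")
            problems.append("%s_Errno is %r (%s)" % (prefix, errno, message))
    return problems
```

An empty list means both threads are running and no error is recorded; anything else is worth an alert.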

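Another quick and dirty way to verify that your data on the slave is synchronized is to compare the row counts of all tables in a particular db. Keep in mind that for InnoDB tables TABLE_ROWS is only an estimate, so small differences there are not conclusive.

SELECT table_name, TABLE_ROWS FROM INFORMATION_SCHEMA.TABLES WHERE TABLE_SCHEMA = 'databasename';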

Run this on both the slave and the master and compare the numbers.
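Comparing two long lists of numbers by eye gets old quickly. Assuming you have loaded the output of the query from each server into a dict of table name to row count (how you fetch it is up to you), the comparison itself is a few lines; `diff_row_counts` is a made-up name.

```python
def diff_row_counts(master, slave):
    """Return {table: (master_rows, slave_rows)} for every table that differs."""
    diffs = {}
    for table in sorted(set(master) | set(slave)):
        master_rows = master.get(table)  # None means the table is missing
        slave_rows = slave.get(table)
        if master_rows != slave_rows:
            diffs[table] = (master_rows, slave_rows)
    return diffs
```

A table that shows up with None on one side is missing there entirely, which is a much louder warning than a count that is off by a few rows.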

If you run into any problems with this process or if you have any queries, please feel free to hit me up at imtiaz at netbrix dot net or leave a comment and I’ll try to help. Try to do periodic tests of your slave server’s integrity and also the integrity of your drives. You don’t want to be caught in the middle of a fsck ruining your happiness, especially if it’s business-critical data.

Online Radio – Howto

Here’s a howto for setting up your own online internet radio station. This was done on a CentOS 6.2 platform, but it’s pretty much the same for others as well. Most of the installs are source compiles; yum has been used for some libraries, but you could use your favourite package manager on your server.

Config files and installation service are available on request. We will be refining this project over time, adding much more functionality and software to the web player. For now enjoy this player here:

Prepare the server for greatness

- yum -y install bzip2-devel ncurses-devel aspell pspell expat-devel gmp-devel freetype-devel flex-devel ruby-libs ruby gd-devel subversion libjpeg-devel libpng-devel gcc-c++ gcc-cpp curl-devel libxml2-devel libtool-ltdl-devel httpd-devel pcre-devel libc-client-devel unixODBC-devel postgresql-devel net-snmp-devel libxslt-devel sqlite-devel readline-devel atop htop pspell-devel

- cd /home/imtiaz/src/; wget http://space.dl.sourceforge.net/project/lame/lame/3.98.4/lame-3.98.4.tar.gz

- tar -zxf lame-3.98.4.tar.gz

- cd lame-3.98.4

- ./configure

- make

- make install

- yum install libshout-devel flac-devel perl-devel python-devel libmp4v2-devel

add a user which will be used to run everything

- useradd ice

- passwd ice

start working on the streaming server setup as the user from above

- su - ice

- mkdir src

- cd src

- wget http://downloads.xiph.org/releases/icecast/icecast-2.3.2.tar.gz

- wget http://www.centova.com/clientdist/ices/ices-cc-0.4.1.tar.gz

- tar -zxf icecast-2.3.2.tar.gz

- tar -zxf ices-cc-0.4.1.tar.gz

setup icecast

- cd icecast-2.3.2

- ./configure --prefix=/home/ice/srv

- make

- make install

build a local copy of flac 1.1.2 (or lower) as ices won’t work with the latest versions of flac

- cd ..

- wget -O flac-1.1.2.tar.gz "http://downloads.sourceforge.net/project/flac/flac-src/flac-1.1.2-src/flac-1.1.2.tar.gz?r=http%3A%2F%2Fsourceforge.net%2Fprojects%2Fflac%2Ffiles%2Fflac-src%2Fflac-1.1.2-src%2F&ts=1338063039&use_mirror=space"

- tar -zxf flac-1.1.2.tar.gz

- cd flac-1.1.2

- ./configure --prefix=$HOME/srv

- make

- make install

build ices

- cd ../ices-cc-0.4.1

- make distclean

- ./configure --prefix=/home/ice/srv --with-flac=/home/ice/srv

- make

- make install

work on the housekeeping

- export PATH=/home/ice/srv/bin/:$PATH

- cd ~/srv

- cd etc/

- vi icecast.xml

- cd

- mkdir -p /home/ice/srv/var/log/icecast

- chmod -R 755 /home/ice/srv/var

Launch icecast

- /home/ice/srv/bin/icecast -c /home/ice/srv/etc/icecast.xml -b

(-b sends it to the background; icecast.xml will need to be edited for passwords etc.)

playlist setup and directory structure for music files and playlists

- cd

- mkdir media

- cd media

- mkdir pls

- cd ~/srv/bin

- vi mp3list

- cd ~/media

- cd music

##(remove spaces from name of songs)

- find . -name '* *' | while read file; do target=`echo "$file" | sed 's/ /_/g'`; echo "Renaming '$file' to '$target'"; mv "$file" "$target"; done;

- cd ~/srv

- cd bin

- chmod 755 mp3list
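The rename one-liner above works fine for a flat directory of songs. If your music sits in folders whose names also contain spaces, a bottom-up walk is safer, since children get renamed before their parents. A sketch of that idea, with `rename_spaces` being a name made up for this example:

```python
import os


def rename_spaces(root):
    """Replace spaces with underscores in every file and directory name under root."""
    renamed = []
    # topdown=False visits the deepest entries first, so a directory is only
    # renamed after everything inside it has been handled.
    for dirpath, dirnames, filenames in os.walk(root, topdown=False):
        for name in filenames + dirnames:
            if " " not in name:
                continue
            src = os.path.join(dirpath, name)
            dst = os.path.join(dirpath, name.replace(" ", "_"))
            if os.path.exists(dst):
                continue  # do not clobber an existing file
            os.rename(src, dst)
            renamed.append((src, dst))
    return renamed
```

The returned list of (old, new) pairs doubles as a log of what was changed.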

##(generate the playlist)

- ./mp3list ~/media/music/1 1
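The mp3list script itself is not reproduced in this howto. As a rough idea of what such a helper does, here is a stand-in sketch that walks a music directory and writes a plain text playlist with one absolute path per line. That file format, and the name `write_playlist`, are assumptions, so check them against your ices configuration.

```python
import os


def write_playlist(music_dir, playlist_path):
    """Write the absolute paths of all .mp3 files under music_dir, one per line."""
    tracks = sorted(
        os.path.abspath(os.path.join(dirpath, name))
        for dirpath, _dirnames, filenames in os.walk(music_dir)
        for name in filenames
        if name.lower().endswith(".mp3")
    )
    with open(playlist_path, "w") as playlist:
        for track in tracks:
            playlist.write(track + "\n")
    return tracks
```

Sorting keeps the playlist stable between runs, which makes it easier to spot what changed when new songs are added.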

(write the config file for the stream)

- cd ~/srv/etc/

- mkdir stubs

- cd stubs

- vi 1.conf

Launch ices

- cd

- srv/bin/ices -c srv/etc/stubs/1.conf

Create the webpage for the demo embedded player

- su -

- cd /var/www/vhosts/default/htdocs/

- mkdir radio

- cd radio

- vi 1.htm

- chmod -R 755 ../radio

That is it: your radio is online and you can tune in by going to the mountpoint you set up in the ices configuration. If you need assistance with this setup please mail us on R at NetBrix dot net. Lots of improvements are on the way; in the meanwhile enjoy this online radio channel.

mod_geoip + php-cgi

Quick note to self: mod_geoip, or more precisely apache_note(), will not work when PHP is running as a CGI handler instead of as an Apache module. Use getenv() instead.

example:

instead of apache_note("GEOIP_COUNTRY_NAME"); use getenv("GEOIP_COUNTRY_NAME");